Tesla World Simulator Debuts at ICCV! VP Personally Deciphers the End-to-End Autonomous Driving Technology Roadmap

default / 2021-11-15

Tesla World Simulator is here!

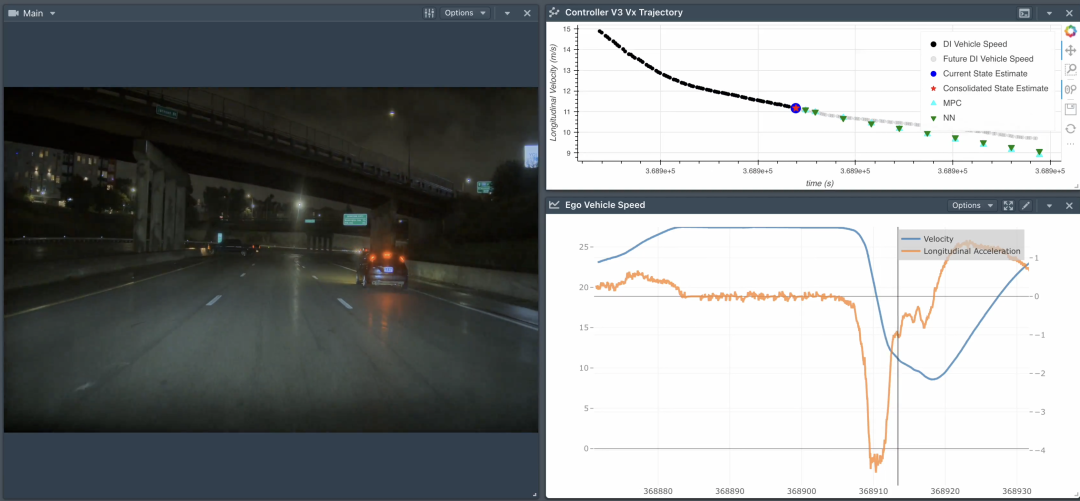

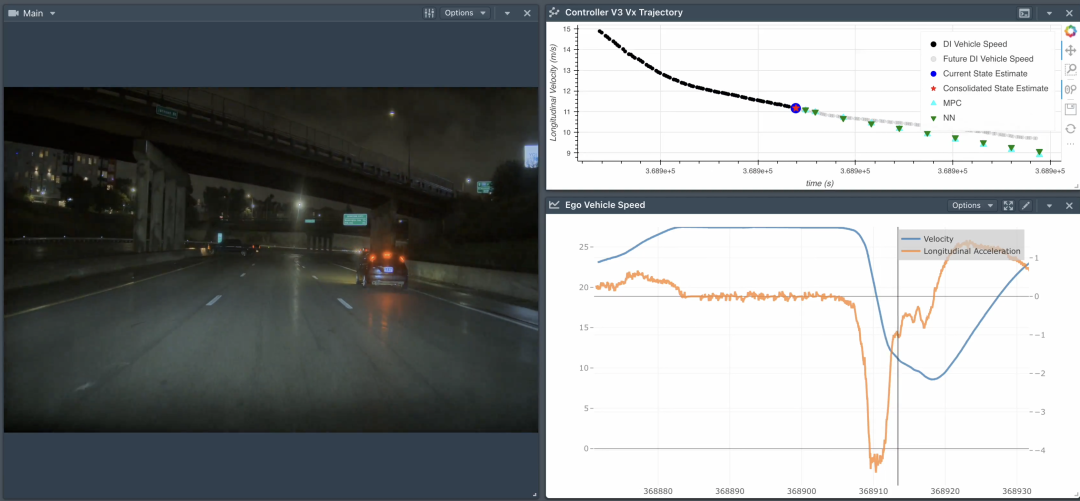

These realistic-looking driving scenes were all generated by the simulator.

The simulator made its debut at this year's ICCV, one of the top computer vision conferences, where Ashok Elluswamy, Tesla's Vice President of Autonomous Driving, presented it in person.

Netizens who saw it called the model absolutely awesome.

Meanwhile, Elluswamy also revealed Tesla's autonomous driving technology roadmap for the first time, stating that end-to-end is the future of intelligent driving.

World Simulator Generates Autonomous Driving Scenarios

Beyond the multi-scenario driving videos shown at the beginning, Tesla's World Simulator can also generate new, challenging scenarios for autonomous driving tasks.

For example, a vehicle on the right suddenly changes lanes twice in a row, cutting into the planned driving path.

It can also let an AI driving policy operate inside existing scenarios, for example avoiding pedestrians and obstacles.

The videos the model generates can serve not only as a practice environment for autonomous driving models, but also as video games that humans can play.

Its usefulness also extends beyond driving to other embodied AI applications, such as Tesla's Optimus robot.

Alongside this model, Tesla also revealed its overall methodology for autonomous driving.

Tesla VP: End-to-End Is the Future of Autonomous Driving

In his ICCV talk, Ashok Elluswamy, Tesla's Vice President of Autonomous Driving, revealed technical details of Tesla's FSD and also posted a text version on X.

Ashok first made it clear that end-to-end AI is the future of autonomous driving.

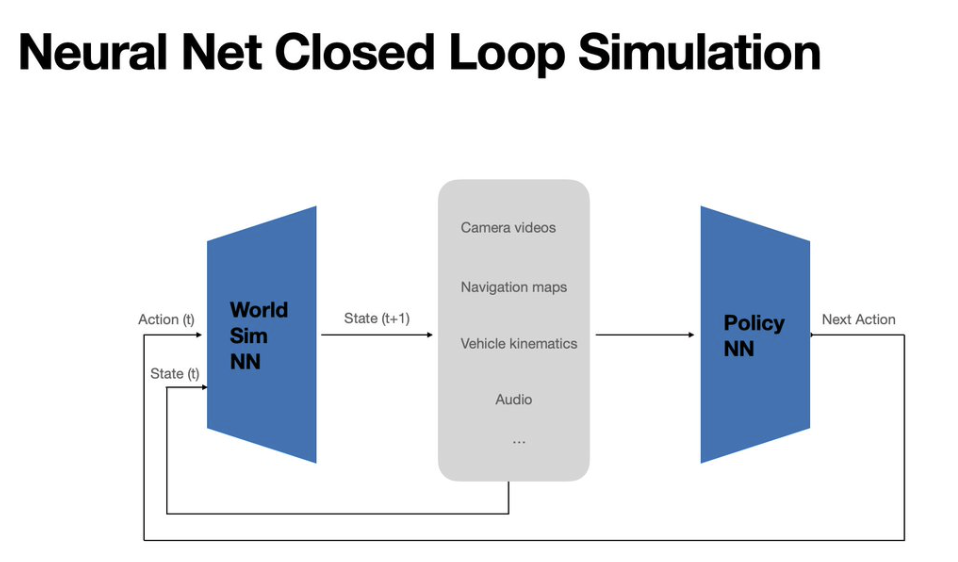

Tesla uses an end-to-end neural network for autonomous driving. The network takes in data from multiple cameras, motion signals (such as vehicle speed), audio, maps, and more, and outputs the control commands that drive the vehicle.

The alternative to the end-to-end approach is a modular, sensor-heavy driving system. Such systems are easier to develop and debug in the early stages, but the end-to-end approach offers stronger advantages overall:

Human values are extremely hard to codify into rules, but much easier to learn from data;

In modular methods, the interfaces between perception, prediction, and planning are ill-defined, whereas in end-to-end systems gradients flow all the way from the controls back to the sensor inputs, so the entire network is optimized jointly;

The end-to-end approach scales easily to the fat, long tail of real-world robotics problems;

End-to-end systems feature homogeneous computing with deterministic latency.
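To make the gradient-flow point concrete, here is a minimal sketch in plain Python. It chains two hypothetical scalar "modules" (a perception weight and a planning weight) and trains both only on the error of the final control output; no interface target is ever defined for the intermediate feature. It is a toy illustration of end-to-end backpropagation, not Tesla's architecture.

```python
from typing import List, Tuple

def forward(w_perc: float, w_plan: float, x: float) -> Tuple[float, float]:
    """Toy pipeline: a 'perception' stage feeds a 'planning' stage that outputs a control."""
    h = w_perc * x  # intermediate feature, with no hand-defined target of its own
    u = w_plan * h  # control output
    return h, u

def train_end_to_end(data: List[Tuple[float, float]], steps: int = 500,
                     lr: float = 0.01) -> Tuple[float, float]:
    """Gradient descent on mean squared control error, updating both stages jointly."""
    w_perc, w_plan = 0.5, 0.5
    n = len(data)
    for _ in range(steps):
        g_perc = g_plan = 0.0
        for x, u_target in data:
            h, u = forward(w_perc, w_plan, x)
            du = 2.0 * (u - u_target) / n  # dLoss/du for the mean squared error
            g_plan += du * h               # dLoss/dw_plan
            g_perc += du * w_plan * x      # chain rule carries the gradient back to the first stage
        w_perc -= lr * g_perc
        w_plan -= lr * g_plan
    return w_perc, w_plan

# Hypothetical demonstrations: the "correct" control is 3x the sensor reading.
demos = [(x, 3.0 * x) for x in (-1.0, -0.5, 0.5, 1.0)]
w_perc, w_plan = train_end_to_end(demos)
print(round(w_perc * w_plan, 3))  # prints 3.0
```

Only the end-to-end product of the two weights is pinned down by the data; how the work is split between the stages is left for the optimizer to decide, which is the contrast with a modular system whose interface is fixed by hand.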

Ashok gave an example. When the car detects standing water on the road ahead, it has two options: drive straight through the puddle, or use the oncoming lane to go around it.

Driving into the oncoming lane is dangerous, but in this particular scene the view is clear: if the oncoming lane is empty for the distance needed to get around the puddle, borrowing it is a reasonable choice.

Such trade-offs are hard to express in traditional programming logic, yet a human looking at the scene makes them quite naturally.
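For contrast, here is what a hand-written rule for the puddle case might look like, as a minimal Python sketch. Every field name and the 50 m safety margin are hypothetical. The point is that each additional consideration (puddle depth, pedestrians, lane markings, oncoming speed) would need yet another hand-tuned branch, which is the kind of logic the end-to-end approach aims to learn from data instead.

```python
from dataclasses import dataclass

@dataclass
class PuddleScene:
    """Hypothetical, hand-picked scene features a rule-based planner might consume."""
    puddle_ahead: bool
    oncoming_visible_m: float  # how far ahead the oncoming lane can be seen
    oncoming_gap_m: float      # distance to the nearest oncoming vehicle
    bypass_length_m: float     # distance needed to get around the puddle

def choose_maneuver(scene: PuddleScene, margin_m: float = 50.0) -> str:
    """Hand-coded rule: borrow the oncoming lane only if it is verifiably clear
    for the whole bypass plus a fixed (hypothetical) safety margin."""
    if not scene.puddle_ahead:
        return "keep_lane"
    clear_m = min(scene.oncoming_visible_m, scene.oncoming_gap_m)
    if clear_m >= scene.bypass_length_m + margin_m:
        return "bypass_via_oncoming_lane"
    return "drive_through_puddle"

print(choose_maneuver(PuddleScene(True, 200.0, 300.0, 30.0)))  # bypass_via_oncoming_lane
print(choose_maneuver(PuddleScene(True, 60.0, 300.0, 30.0)))   # drive_through_puddle
```

Even this toy rule hides judgment calls: the right margin depends on speed, weather, and how confident perception is, and none of those appear in it.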

Based on the above considerations and other factors, Tesla has adopted an end-to-end autonomous driving architecture. Of course, end-to-end systems still have many challenges to overcome.

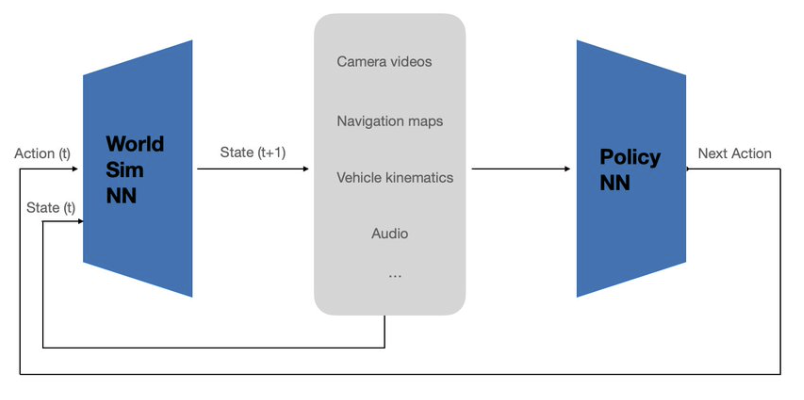

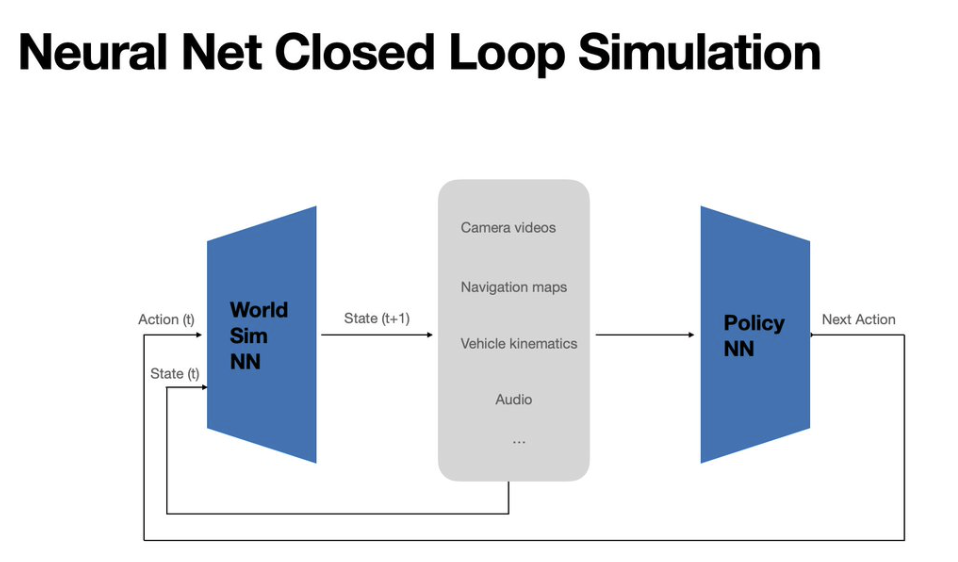

One of the difficulties faced by end-to-end autonomous driving is evaluation. Tesla's World Simulator is precisely designed to tackle this problem.

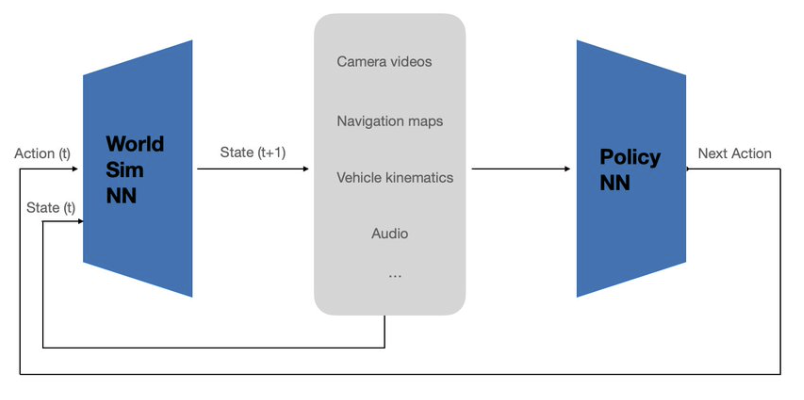

The simulator is trained on the same massive dataset curated by Tesla. Rather than predicting an action for a given state, it synthesizes future states from the current state and the next action.

These synthesized states can be fed to an agent or policy model and run in a closed loop to evaluate its performance.

These videos are not limited to evaluation, either: they can also drive large-scale closed-loop reinforcement learning, with the goal of reaching performance beyond human capability.
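The closed-loop idea can be sketched in a few lines of Python. Everything here is a hypothetical stand-in: the "world model" is a one-line function over a scalar lateral offset rather than a learned video generator, and the policy-improvement step is a brute-force search over a single gain, a deliberately simple substitute for large-scale reinforcement learning. What the sketch does show is the interface described above: the simulator maps (current state, next action) to the next state, and the policy always acts on the simulator's own output rather than on logged data.

```python
from typing import Callable, List

State = float   # hypothetical: lateral offset from lane center (m)
Action = float  # hypothetical: lateral correction applied over one step (m)
Policy = Callable[[State], Action]

def toy_world_model(state: State, action: Action) -> State:
    """Stand-in for a learned simulator: predicts the next state from (current state, next action).
    Assumed dynamics: the action shifts the car, plus a constant 0.1 m drift per step."""
    return state + action + 0.1

def rollout(policy: Policy, init_state: State = 1.0, steps: int = 50) -> List[State]:
    """Closed loop: each action is computed from the simulator's previous prediction."""
    states = [init_state]
    for _ in range(steps):
        s = states[-1]
        states.append(toy_world_model(s, policy(s)))
    return states

def lane_keeping_score(policy: Policy, lane_half_width: float = 1.5) -> float:
    """Evaluation: fraction of simulated states that stay inside the lane."""
    states = rollout(policy)
    return sum(abs(s) <= lane_half_width for s in states) / len(states)

def episode_return(policy: Policy) -> float:
    """Reward signal for policy improvement: penalize the squared offset at every step."""
    return -sum(s * s for s in rollout(policy))

def search_gain(candidates: List[float]) -> float:
    """Pick the gain k (policy: action = -k * state) with the highest simulated return.
    A brute-force stand-in for RL, chosen only to keep the example tiny."""
    return max(candidates, key=lambda k: episode_return(lambda s: -k * s))

def drift_only(s: State) -> Action:
    return 0.0

print(lane_keeping_score(drift_only))  # low: the car drifts out of the lane
best_k = search_gain([0.0, 0.25, 0.5, 0.75, 1.0])
print(best_k)  # 1.0
print(lane_keeping_score(lambda s: -best_k * s))  # 1.0
```

Swapping the toy function for a learned model leaves `rollout`, the evaluation metric, and the improvement loop unchanged, which is why a single simulator can serve both evaluation and training.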

Beyond evaluation, end-to-end autonomous driving also faces issues such as the "curse of dimensionality," as well as challenges related to interpretability and safety guarantees.

In the real world, for an autonomous driving system to operate safely, it needs to process high-frame-rate, high-resolution, and long-context inputs.

Suppose the input covers camera footage of 7 cameras × 36 FPS × 5 megapixels × 30 seconds, navigation maps and routes for the next few miles, motion data at 100 Hz, and audio at 48 kHz. That adds up to roughly 2 billion input tokens.
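The raw sample counts behind this estimate can be checked with simple arithmetic, sketched in Python below. Only the camera, motion, and audio figures come from the text; the map and route inputs are left out because no size is given. The final ratio rests on an assumption of ours, not on anything Tesla described: that video accounts for nearly all of the ~2 billion tokens.

```python
CAMERAS = 7
FPS = 36
PIXELS_PER_FRAME = 5_000_000   # 5 megapixels per camera frame
SECONDS = 30
MOTION_HZ = 100
AUDIO_HZ = 48_000
QUOTED_TOKENS = 2_000_000_000  # "approximately 2 billion input tokens"

frames = CAMERAS * FPS * SECONDS          # 7,560 camera frames
video_pixels = frames * PIXELS_PER_FRAME  # 37,800,000,000 pixels
motion_samples = MOTION_HZ * SECONDS      # 3,000 motion samples
audio_samples = AUDIO_HZ * SECONDS        # 1,440,000 audio samples

# Assumption: if video dominates the token budget, each token stands in for this many pixels.
pixels_per_token = video_pixels / QUOTED_TOKENS
print(frames, video_pixels, motion_samples, audio_samples, round(pixels_per_token, 1))
```

Audio and motion together contribute only about 1.4 million samples, so the camera stream is what makes the context so large; the roughly 19 pixels per token is merely what the quoted total implies, since the actual tokenization is not described.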

The neural network must learn the correct causal mapping to distill these 2 billion tokens into just 2 outputs: the vehicle's next steering and acceleration actions. Learning the true causal relationships without picking up spurious correlations is an extremely challenging problem.

To address this, Tesla's massive fleet collects the equivalent of 500 years of driving experience every day, and a sophisticated data engine selects the highest-quality samples from it.

Training on such data gives the model strong generalization, allowing it to handle extreme corner cases.
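A data engine's selection step can be pictured as a filter-then-rank pass, sketched below in Python. The fields, thresholds, and per-scenario cap are all hypothetical; Tesla's actual curation criteria are not described here. The cap illustrates one plausible way to keep abundant, routine clips from swamping the rare ones that matter for generalization.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List

@dataclass
class Clip:
    clip_id: str
    scenario: str        # hypothetical scenario label, e.g. "highway_cruise"
    sensors_ok: bool     # hypothetical: every camera valid, no dropped frames
    demo_quality: float  # hypothetical 0..1 score of how well the human drove

def curate(clips: List[Clip], min_quality: float = 0.8, per_scenario_cap: int = 2) -> List[str]:
    """Drop faulty or low-quality clips, then keep the best few per scenario."""
    buckets: Dict[str, List[Clip]] = defaultdict(list)
    for clip in clips:
        if clip.sensors_ok and clip.demo_quality >= min_quality:
            buckets[clip.scenario].append(clip)
    selected: List[str] = []
    for scenario in sorted(buckets):
        ranked = sorted(buckets[scenario], key=lambda c: c.demo_quality, reverse=True)
        selected.extend(c.clip_id for c in ranked[:per_scenario_cap])
    return selected

fleet_clips = [
    Clip("a", "highway_cruise", True, 0.95),
    Clip("b", "highway_cruise", True, 0.90),
    Clip("c", "highway_cruise", True, 0.85),  # good, but over the cap for a common scenario
    Clip("d", "highway_cruise", True, 0.50),  # poor demonstration
    Clip("e", "puddle_bypass", True, 0.90),   # rare scenario, kept
    Clip("f", "puddle_bypass", False, 0.99),  # sensor fault, dropped
]
print(curate(fleet_clips))  # ['a', 'b', 'e']
```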

As for interpretability and safety, debugging an end-to-end system can be difficult when the vehicle behaves unexpectedly. However, the model can also produce interpretable intermediate tokens, which can serve as reasoning tokens when the situation calls for it.

Tesla's Generative Gaussian Splatting is one such task. It generalizes well, can model dynamic objects without initialization, and can be trained jointly with the end-to-end model.

All the Gaussians shown here are generated from the cameras fitted to mass-produced vehicles.
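How Tesla's generative version produces its Gaussians is not described here, but the rendering step shared by Gaussian Splatting methods in general is front-to-back alpha compositing of depth-sorted Gaussians. The Python sketch below shows that step for one pixel under simplifying assumptions: the Gaussians are already projected onto the image plane, each has an isotropic footprint (standard 3D Gaussian Splatting uses a full 2×2 covariance), and colors are plain RGB rather than spherical-harmonic coefficients.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple

RGB = Tuple[float, float, float]

@dataclass
class Splat2D:
    """A Gaussian already projected onto the image plane (projection step omitted)."""
    center: Tuple[float, float]  # pixel coordinates of the projected mean
    sigma: float                 # isotropic footprint std dev in pixels (simplification)
    depth: float                 # camera-space depth, used for sorting
    opacity: float               # peak opacity in [0, 1]
    color: RGB

def render_pixel(splats: List[Splat2D], px: float, py: float) -> RGB:
    """Front-to-back compositing: C = sum_i c_i * a_i * prod_{j<i} (1 - a_j)."""
    out = [0.0, 0.0, 0.0]
    transmittance = 1.0  # fraction of light not yet absorbed by nearer splats
    for g in sorted(splats, key=lambda s: s.depth):
        dx, dy = px - g.center[0], py - g.center[1]
        alpha = g.opacity * math.exp(-0.5 * (dx * dx + dy * dy) / (g.sigma ** 2))
        for k in range(3):
            out[k] += transmittance * alpha * g.color[k]
        transmittance *= 1.0 - alpha
        if transmittance < 1e-4:  # stop early once the pixel is effectively opaque
            break
    return (out[0], out[1], out[2])

red_front = Splat2D((10.0, 10.0), 2.0, depth=1.0, opacity=0.5, color=(1.0, 0.0, 0.0))
blue_back = Splat2D((10.0, 10.0), 2.0, depth=5.0, opacity=1.0, color=(0.0, 0.0, 1.0))
print(render_pixel([blue_back, red_front], 10.0, 10.0))  # (0.5, 0.0, 0.5)
```

Because every operation here is differentiable in the Gaussians' parameters, a renderer of this kind can sit inside a larger network and receive gradients, which is what makes joint training with an end-to-end model possible in principle.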

In addition to 3D geometry, reasoning can also be performed through natural language and video contexts. A small version of this reasoning model is already running in FSD v14.x.

For more technical details, you can explore Ashok’s article and the original speech video.

While end-to-end is widely regarded as the future of autonomous driving, the industry has long debated which specific algorithmic route to take: VLA (Vision-Language-Action) or the World Model.

Taking China as an example, Huawei and NIO are representatives of the World Model route, while DeepRoute.ai and Li Auto have chosen the VLA route. Additionally, some players believe the two should be combined.

Proponents of VLA argue that, on the one hand, this paradigm can draw on the massive existing data on the internet to accumulate rich common sense and better understand the world. On the other hand, language ability gives the model chain-of-thought capabilities, enabling it to understand long-sequence data and reason about it.

A more pointed view suggests that some manufacturers avoid VLA simply due to insufficient computing power to support VLA models.

Advocates of the World Model insist it is closer to the essence of the problem. For instance, Jin Yuzhi, CEO of Huawei’s Car Business Unit, stated, “Paths like VLA may seem clever, but they cannot truly lead to autonomous driving.”

Tesla's approach is now drawing significant attention precisely because Musk has never “chosen the wrong path” in developing autonomous driving.

Which route will Tesla take: VLA or World Model? This marks a historic showdown between the two major technical routes of end-to-end autonomous driving.

Do you favor VLA or the World Model?